Introduction: Why Containers Matter

Hey there! If you've been in the tech world lately, you've probably heard the buzz around containers and Docker. But what's the big deal? Well, before containers, developers faced the classic problem: "It works on my machine!" This frustrating reality led to countless hours debugging environment issues rather than building cool features.

Containers solve this by packaging applications with everything they need to run – code, runtime, libraries, and settings – ensuring consistent behavior across different environments. Whether you're running your app on your laptop, a test server, or in production, containers make sure it works the same way everywhere.

What is Docker?

Docker is the most popular containerization platform, and it has changed how we build, ship, and run applications. Think of Docker as a standardized shipping container for software – just as physical shipping containers transformed global trade by making it easy to move goods, Docker containers make it easy to move software.

Docker's Core Concepts

At its heart, Docker is built around several key concepts:

- Images: These are read-only templates that contain everything needed to run an application. Think of an image as a snapshot of an application's filesystem and configuration that you can share and reuse.

- Containers: These are runnable instances of images. A container isolates an application and its dependencies from the host system and other containers.

- Dockerfile: This is a text file with instructions for building a Docker image, similar to a recipe for creating your container.

- Registry: A registry stores Docker images. Docker Hub is the default public registry, but you can also set up private registries.

Docker Architecture and Workflow

Docker uses a client-server architecture. The Docker client communicates with the Docker daemon, which builds, runs, and manages containers. Here's a simplified workflow:

- You create a Dockerfile that defines your application environment

- You build an image from this Dockerfile

- You run a container from this image

- You can share the image via a registry so others can run containers from it

Let's look at a simple example. Imagine you want to containerize a Python web application:

# Dockerfile example
FROM python:3.9-slim
WORKDIR /app
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt
COPY . .
EXPOSE 5000
CMD ["python", "app.py"]

Then you'd build and run it:

# Build the image
docker build -t my-python-app .

# Run a container from the image
docker run -p 5000:5000 my-python-app

Voilà! Your application is running in a container, isolated from the host's filesystem and processes, and reachable at http://localhost:5000 through the port mapping.
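The article doesn't show the app.py that the Dockerfile starts, so here is a minimal sketch of what it could look like, using only the Python standard library. The handler name, the greeting text, and the make_server helper are assumptions made for illustration. The detail worth noticing is the bind address: the server listens on 0.0.0.0, because an app that listens only on 127.0.0.1 inside the container can't be reached through -p 5000:5000.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from inside a container!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="0.0.0.0", port=5000):
    # 0.0.0.0 means "all interfaces inside the container". Port 5000
    # matches EXPOSE 5000 and the -p 5000:5000 mapping used above.
    return ThreadingHTTPServer((host, port), HelloHandler)


def main():
    server = make_server()
    print("Listening on 0.0.0.0:5000")
    server.serve_forever()
```

To make python app.py actually start the server, end the file with a call to main(), typically under an if __name__ == "__main__": guard. With only standard-library imports, requirements.txt can stay empty for this sketch.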

Docker Compose: Managing Multi-Container Applications

While Docker is great for single containers, most real-world applications consist of multiple interconnected services. For example, a typical web application might include:

- A frontend web server

- A backend API

- A database

- A caching service

- A message queue

Managing all these containers individually would be tedious. That's where Docker Compose comes in.

What is Docker Compose?

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application's services, networks, and volumes. Then, with a single command, you create and start all the services from your configuration.

Docker Compose Key Features

- Service definition: Define each component of your application as a service

- Networking: Automatic creation of a network for your application

- Volume management: Persist data between container restarts

- Environment variables: Configure services differently in different environments

- Dependency management: Control the startup order of services
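A note on that last feature: startup order is not the same as readiness. A plain depends_on entry only makes sure the other container has been started; it doesn't wait until the service inside it accepts connections. One common workaround is to have the application retry until its dependency answers. Here's a rough sketch of that idea; the function name and the default timings are assumptions, not part of Compose:

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Opening and immediately closing a connection is enough to
            # know that something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

A service could call wait_for_port("db", 5432) before opening its database connection. Keep in mind that an open port only shows the server is listening; a database may still be initializing, so retrying the first real query is a sensible complement.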

Getting Started with Docker Compose

Installation

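Docker Compose is included with Docker Desktop for Windows and Mac. On Linux, you might need to install it separately. Newer releases ship Compose as a Docker CLI plugin that you invoke as docker compose (with a space instead of a hyphen); the commands in this article work with either spelling. Check if it's installed: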

docker-compose --version

Creating a docker-compose.yml File

The heart of Docker Compose is the docker-compose.yml file. Here's a basic example for a web application with a database:

version: '3'
services:
  web:
    build: ./web
    ports:
      - "8000:8000"
    depends_on:
      - db
    environment:
      - DATABASE_URL=postgres://postgres:password@db:5432/mydb
  db:
    image: postgres:13
    volumes:
      - postgres_data:/var/lib/postgresql/data
    environment:
      - POSTGRES_USER=postgres
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=mydb
volumes:
  postgres_data:

This configuration:

- Defines two services: web and db

- Builds the web service from a Dockerfile in the ./web directory

- Uses the official PostgreSQL image for the db service

- Maps port 8000 on your host to port 8000 in the web container

- Sets up environment variables for database connection

- Creates a persistent volume for the database data

Running Your Application with Docker Compose

Once your docker-compose.yml file is ready, you can start your application with:

docker-compose up

This command builds any missing images, creates containers, and starts them. Add the -d flag to run in detached mode (background):

docker-compose up -d

To stop your application:

docker-compose down

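Add --volumes to also remove the named volumes declared in the file. Be careful: this deletes the data stored in them, such as the database contents: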

docker-compose down --volumes

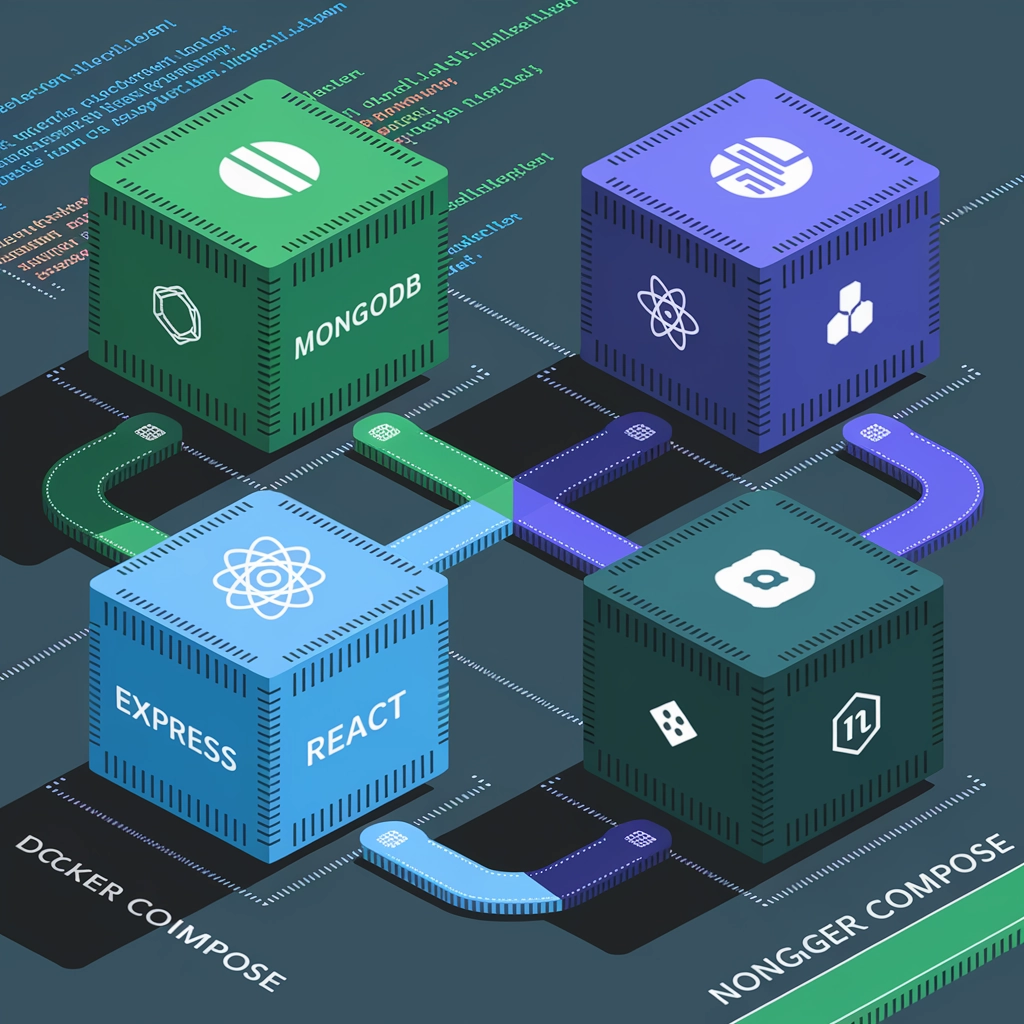

Real-World Docker Compose Example: MERN Stack

Let's look at a more concrete example for a MERN (MongoDB, Express, React, Node.js) stack application:

version: '3'
services:
  frontend:
    build: ./client
    ports:
      - "3000:3000"
    depends_on:
      - backend
    environment:
      - REACT_APP_API_URL=http://localhost:5000
  backend:
    build: ./server
    ports:
      - "5000:5000"
    depends_on:
      - mongo
    environment:
      - MONGO_URI=mongodb://mongo:27017/myapp
      - PORT=5000
  mongo:
    image: mongo:latest
    ports:
      - "27017:27017"
    volumes:
      - mongo_data:/data/db
volumes:
  mongo_data:

Advanced Docker Compose Features

Scaling Services

Need to run multiple instances of a service? Docker Compose makes it easy:

docker-compose up --scale backend=3

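This runs three instances of the backend service. Note that a service with a fixed host port mapping such as "5000:5000" can't be scaled this way, because only one container can bind a given host port. Remove the mapping, or publish only the container port so Docker picks free host ports.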

Health Checks

You can add health checks to ensure your services are truly ready:

services:
  web:
    image: myapp
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:8000/health"]
      interval: 30s
      timeout: 10s
      retries: 3
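The check above relies on two things: curl has to be installed in the image, and the app has to expose a /health endpoint. Because curl -f exits with an error for HTTP status codes of 400 and above, the endpoint only needs to return a 5xx status when something is wrong. Here's a sketch of the logic behind such an endpoint; the function name, the shape of the checks argument, and the JSON body are assumptions, and wiring it into a web framework is left out:

```python
import json


def health_response(checks):
    """Build (status_code, body) for a /health endpoint.

    `checks` maps a dependency name to a zero-argument callable that
    returns True when that dependency is usable.
    """
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            # A check that raises counts as a failed check.
            results[name] = False
    status = 200 if all(results.values()) else 503
    body = json.dumps({"healthy": status == 200, "checks": results})
    return status, body
```

A route handler would call something like health_response({"db": can_query_db}), where can_query_db is a hypothetical function of your own, and send back the returned status and body.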

Networks

By default, Docker Compose creates a network for your application. You can also define custom networks:

services:
  web:
    networks:
      - frontend
      - backend
  db:
    networks:
      - backend
networks:
  frontend:
  backend:
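The rule behind custom networks is simple: two services can talk to each other directly only if they share at least one network. The toy model below makes that rule explicit. It doesn't talk to Docker at all, and the proxy service is a hypothetical addition that isn't in the file above.

```python
def can_reach(services, a, b):
    """Two services can talk directly only if they share a network."""
    return bool(set(services[a]) & set(services[b]))


# Service name -> networks it is attached to.
services = {
    "web": ["frontend", "backend"],
    "db": ["backend"],
    "proxy": ["frontend"],  # hypothetical service, for illustration only
}

print(can_reach(services, "web", "db"))    # web and db share "backend"
print(can_reach(services, "proxy", "db"))  # no shared network
```

In this layout the database stays out of reach of anything attached only to the frontend network, which is the usual reason for splitting networks in the first place.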

Best Practices for Docker and Docker Compose

- Keep images small: Use multi-stage builds and minimal base images like Alpine.

- Don't run as root: Use the USER instruction in your Dockerfile to run as a non-root user.

- Use .dockerignore: Like .gitignore, this keeps unnecessary files out of your images.

- Set resource limits: Prevent containers from consuming too many resources:

services:
  web:
    deploy:
      resources:
        limits:
          cpus: '0.5'
          memory: 512M

- Use environment variables wisely: Use .env files for local development, and a dedicated secrets management tool in production.

- Version control your Docker files: Keep Dockerfiles and docker-compose.yml files in version control.
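If you haven't used a .env file before, it's just a list of KEY=value lines, with blank lines and # comments allowed. The sketch below reads that basic shape into a dictionary. It's a simplified illustration, not the parser Compose itself uses, and it skips features such as variable interpolation; the function name is an assumption.

```python
def parse_env_file(text):
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, separator, value = line.partition("=")
        if not separator:
            continue  # not a KEY=value line, ignore it
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values
```

Keep real .env files out of version control (for example through .gitignore) when they contain passwords.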

Common Pitfalls and Troubleshooting

- Container connectivity issues: Make sure services can resolve each other by service name.

- Persistence problems: Remember that containers are ephemeral; use volumes for data that needs to persist.

- Build context too large: A large build context slows down builds. Use .dockerignore.

- Networking confusion: By default, services can reach each other by name, but external access requires port mapping.

- Resource constraints: Containers sharing a host compete for resources. Set appropriate limits.
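For the first pitfall, a quick way to test name resolution is to run a short lookup from inside one of the containers. Here's a sketch of such a helper; the function name is an assumption:

```python
import socket


def resolves(hostname):
    """Return the IP address a hostname resolves to, or None if lookup fails."""
    try:
        return socket.gethostbyname(hostname)
    except OSError:
        # socket.gaierror (name not found) is a subclass of OSError.
        return None
```

Run from the web container, resolves("db") should return an address when both services share a network, and None when they don't. If you get None, check the service name spelling and the networks each service is attached to.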

Conclusion: Container Power Unleashed

Docker and Docker Compose have transformed how we develop, test, and deploy applications. By packaging applications consistently and simplifying multi-service orchestration, they go a long way toward solving the "works on my machine" problem.

Whether you're a solo developer working on a side project or part of a large team building complex microservices, mastering Docker and Docker Compose will make your development process smoother and more reliable.

Ready to take your Docker skills to the next level? Check out our Kubernetes courses to learn how to orchestrate containers at scale, or explore our AWS content to see how containers fit into cloud deployments.

Happy containerizing!
