Introduction: Beyond the Container Revolution

So you've mastered Docker containers (if not, check out our previous article in this series), and now you're wondering, "What's next?" Well, containers are awesome, but when you're running dozens, hundreds, or even thousands of them across multiple environments, things get complicated fast. That's where Kubernetes comes in—it's like the conductor of your container orchestra, making sure every instrument plays its part at the right time.

In this guide, we'll break down what Kubernetes is, how it works, and why it has become the go-to solution for container orchestration in modern DevOps. No fancy jargon (well, maybe some, but we'll explain it)—just practical insights to help you understand why Kubernetes matters and how to start thinking about it.

What Is Kubernetes and Why Should You Care?

Kubernetes (often abbreviated as K8s, where the 8 stands for the eight letters between 'K' and 's') is an open-source platform designed to automate the deployment, scaling, and management of containerized applications. Google originally developed it, and the project is now hosted by the Cloud Native Computing Foundation (CNCF) and maintained by a large open-source community.

Think of Kubernetes as a super-smart manager for your containers that handles:

- Deploying your applications

- Scaling them up or down based on demand

- Rolling out updates without downtime

- Self-healing when things go wrong

- Load balancing traffic between containers

- Managing storage for your applications

In a world where applications need to be always available, easily scalable, and quickly deployable, Kubernetes provides the foundation that makes this possible. It's why companies from startups to enterprises are adopting it as part of their DevOps transformation.

The Kubernetes Architecture: How It All Fits Together

Let's break down the key components that make up a Kubernetes cluster:

Control Plane (historically called the Master Node)

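The control plane is the brain of Kubernetes. It makes global decisions about the cluster (such as scheduling) and detects and responds to cluster events, for example by starting a new pod when a Deployment has fewer replicas than requested. Its components include: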

- API Server: The front door to Kubernetes. All commands, queries, and external communications go through here.

- etcd: A distributed key-value store that holds all cluster data—think of it as the cluster's memory.

- Scheduler: Decides which node should run which pod based on resource requirements and constraints.

- Controller Manager: Runs controller processes that regulate the state of the cluster, ensuring the desired state matches the actual state.

- Cloud Controller Manager: Connects the cluster to your cloud provider's API when you're running in the cloud, for tasks such as provisioning load balancers and tracking node lifecycle.

Worker Nodes

These are the machines that actually run your applications. Each worker node includes:

- Kubelet: The primary node agent; it makes sure the containers described in each pod assigned to its node are running and healthy.

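- Container Runtime: The software responsible for actually running containers, such as containerd or CRI-O. Docker Engine is no longer supported directly since Kubernetes 1.24 removed the dockershim component, but images built with Docker run unchanged.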

- Kube-proxy: Maintains network rules on each node so that traffic sent to a Service is forwarded to the right pods.

Kubernetes Objects

Kubernetes operates with several key objects that you'll interact with:

- Pods: The smallest deployable units in Kubernetes. A pod contains one or more containers that share storage and network resources.

- Deployments: Manage pods (through ReplicaSets) and provide declarative updates. You tell a Deployment how many replicas of a pod you want, and it ensures that number is maintained.

- Services: Provide a consistent way to access pods, regardless of where they're running or how many there are.

- ConfigMaps and Secrets: Store configuration data and sensitive information, respectively.

- Persistent Volumes: Provide storage that lives beyond the lifecycle of a pod.
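
For illustration, here is a minimal sketch of a ConfigMap and a Secret handed to a container as environment variables. The names my-app-config and my-app-secret, their keys, and their values are hypothetical placeholders. Keep in mind that Secret values are only base64-encoded, not encrypted, unless encryption at rest is enabled for the cluster.

```yaml
# Hypothetical names and values throughout; adapt them to your application.
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-app-config
data:
  LOG_LEVEL: "info"            # plain, non-sensitive setting
---
apiVersion: v1
kind: Secret
metadata:
  name: my-app-secret
type: Opaque
stringData:                    # plain text here; the API server stores it base64-encoded
  DB_PASSWORD: "change-me"     # placeholder, never commit real secrets to Git
---
apiVersion: v1
kind: Pod
metadata:
  name: config-demo
spec:
  containers:
    - name: my-container
      image: my-image:latest
      envFrom:                 # every key becomes an environment variable in the container
        - configMapRef:
            name: my-app-config
        - secretRef:
            name: my-app-secret
```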

How Kubernetes Orchestrates Your Containers

Now that we understand the components, let's see how Kubernetes actually orchestrates containers:

1. Definition and Configuration

Everything in Kubernetes starts with a declarative configuration—usually YAML files that describe what you want your application to look like. For example:

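```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3                  # desired number of identical pods
  selector:
    matchLabels:
      app: my-app              # must match the pod template labels below
  template:                    # blueprint for every pod this Deployment creates
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: my-image:latest
          ports:
            - containerPort: 8080
```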

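This tells Kubernetes: "I want three replicas of my-app running, using this container image, with each container listening on port 8080." Note that containerPort is mostly informational; making the pods reachable is the job of a Service.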

2. Scheduling

When you apply this configuration (for example, with kubectl apply -f), the API server validates it and stores it in etcd, controllers create the requested pods, and the scheduler assigns each new pod to the best node based on:

- Available resources (CPU, memory)

- Policy constraints

- Affinity/anti-affinity specifications

- Taints and tolerations
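
The sketch below shows where each of those scheduling inputs lives in a pod spec. The node label disktype: ssd and the taint dedicated=web:NoSchedule are hypothetical; with this nodeSelector, the pod stays Pending unless some node actually carries that label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo
  labels:
    app: my-app
spec:
  containers:
    - name: my-container
      image: my-image:latest
      resources:
        requests:              # only nodes with this much unreserved capacity qualify
          cpu: "250m"
          memory: "128Mi"
  nodeSelector:                # policy constraint: hypothetical node label
    disktype: ssd
  affinity:
    podAntiAffinity:           # prefer spreading my-app pods across different nodes
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 100
          podAffinityTerm:
            labelSelector:
              matchLabels:
                app: my-app
            topologyKey: kubernetes.io/hostname
  tolerations:                 # permits (but does not force) placement on nodes with this taint
    - key: "dedicated"         # hypothetical taint key and value
      operator: "Equal"
      value: "web"
      effect: "NoSchedule"
```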

3. Container Lifecycle Management

Once pods are scheduled, Kubernetes manages their entire lifecycle:

- Creation: The kubelet on each assigned node pulls the image and starts the pod's containers

- Health Monitoring: Liveness and readiness probes regularly check whether containers are healthy and ready to receive traffic

- Scaling: Adjusting the number of pod replicas based on demand or manual configuration

- Updates: Rolling out new versions without downtime

- Termination: Gracefully shutting down pods when needed
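
Graceful termination can be tuned per pod. In this minimal sketch, Kubernetes first runs the preStop hook, then sends SIGTERM to the container, and only force-kills it with SIGKILL if it is still running 30 seconds after termination began. The 5-second sleep is an arbitrary, hypothetical value, and the hook assumes the image contains sh.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: graceful-demo
spec:
  terminationGracePeriodSeconds: 30          # total time allowed before SIGKILL (30 is also the default)
  containers:
    - name: my-container
      image: my-image:latest
      lifecycle:
        preStop:                             # runs before SIGTERM is sent
          exec:
            command: ["sh", "-c", "sleep 5"] # hypothetical: give load balancers time to stop routing here
```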

4. Self-Healing Capabilities

One of Kubernetes' most powerful features is its ability to self-heal:

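- If a container crashes, the kubelet restarts it (subject to the pod's restartPolicy)

- If a node dies, controllers such as Deployments create replacement pods on healthy nodes

- If a Deployment has fewer running replicas than requested, Kubernetes creates more

- If a container fails its liveness probe, Kubernetes restarts it; if it fails its readiness probe, Kubernetes stops sending it Service traffic until it recovers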

This self-healing happens automatically without human intervention, making your applications more resilient.
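
Health checks are declared per container. Here is a sketch that adds both probe types to the earlier my-app Deployment; the /healthz and /ready paths are hypothetical endpoints your application would need to serve, and the timing values are illustrative rather than recommended defaults.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: my-image:latest
          ports:
            - containerPort: 8080
          livenessProbe:               # failing this restarts the container
            httpGet:
              path: /healthz           # hypothetical health endpoint
              port: 8080
            initialDelaySeconds: 10
            periodSeconds: 10
            failureThreshold: 3
          readinessProbe:              # failing this removes the pod from Service endpoints
            httpGet:
              path: /ready             # hypothetical readiness endpoint
              port: 8080
            periodSeconds: 5
```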

Key Features That Make Kubernetes Powerful

Automatic Scaling

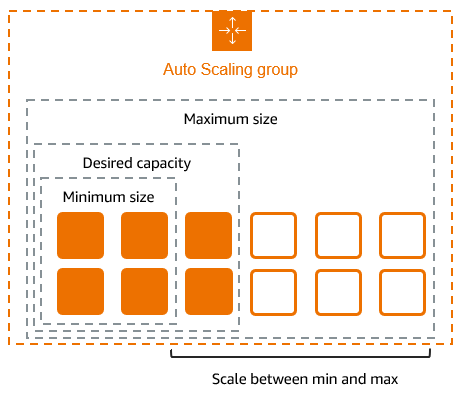

Kubernetes can scale your applications, and the cluster underneath them, in three ways:

- Horizontal Pod Autoscaler (HPA): Automatically adjusts the number of pod replicas based on observed CPU utilization or other metrics.

- Vertical Pod Autoscaler (VPA): Adjusts the CPU and memory requests of pods to better match their actual usage. It is an add-on that you install separately.

- Cluster Autoscaler: Works with your cloud provider to automatically adjust the size of your Kubernetes cluster.
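
As a sketch, an HPA for the my-app Deployment could look like this. It assumes the cluster runs a metrics source such as metrics-server and that the containers declare CPU requests, because utilization is measured as a percentage of the request. The 70% target and the 3 to 10 replica range are arbitrary example values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: my-app
spec:
  scaleTargetRef:                # the workload to scale
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70 # aim for about 70% of requested CPU on average
```

Roughly, the controller computes desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), so 3 pods averaging 140% CPU against a 70% target would be scaled to 6, capped by maxReplicas.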

Rolling Updates and Rollbacks

Need to update your application? Kubernetes has you covered:

kubectl set image deployment/my-app my-container=my-image:v2

This gradually replaces old pods with new ones, keeping the application available throughout as long as the new pods pass their readiness checks. If something goes wrong:

kubectl rollout undo deployment/my-app

And you're back to the previous working version!
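
How aggressively a rolling update proceeds is set in the Deployment's strategy. This sketch (the values are illustrative choices, not requirements) adds at most one extra pod at a time and never drops below the desired replica count; the built-in defaults are 25% for both maxSurge and maxUnavailable.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
spec:
  replicas: 3
  revisionHistoryLimit: 10     # old ReplicaSets kept around for rollbacks
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1              # at most 1 pod above the desired count during the update
      maxUnavailable: 0        # never fewer than 3 available pods
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: my-image:v2
```

While an update runs, kubectl rollout status deployment/my-app follows its progress, and kubectl rollout history deployment/my-app lists the revisions you can return to with kubectl rollout undo deployment/my-app --to-revision=N.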

Service Discovery and Load Balancing

Kubernetes Services provide a stable endpoint for accessing your pods, even as they come and go:

- ClusterIP (the default): Exposes the service on an internal IP that is reachable only from within the cluster

- NodePort: Exposes the service on each node's IP at a static port

- LoadBalancer: Exposes the service externally using a cloud provider's load balancer

- ExternalName: Maps the service to a DNS name
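
A minimal Service for the my-app pods might look like the following sketch. The selector ties it to pods labeled app: my-app, and the port numbers assume the application listens on 8080 as in the Deployment above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: ClusterIP              # switch to NodePort or LoadBalancer to expose it outside the cluster
  selector:
    app: my-app                # traffic is spread across ready pods with this label
  ports:
    - name: http
      port: 80                 # port clients use to reach the Service
      targetPort: 8080         # containerPort on the pods
```

Pods in the same namespace can then reach it at http://my-app, and pods elsewhere at my-app.<namespace>.svc.cluster.local, assuming the default cluster.local domain.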

Resource Management

Kubernetes helps you make the most of your infrastructure:

- Request the resources your containers need

- Set limits to prevent resource hogging

- Efficiently pack containers onto nodes

- Define priority classes for important workloads
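
Here is a sketch combining requests, limits, and a priority class. The class name business-critical and all the numbers are hypothetical; pick values from observed usage rather than copying these.

```yaml
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: business-critical      # hypothetical name
value: 100000                  # higher values are scheduled first and may preempt lower-priority pods
globalDefault: false
description: "Workloads that must keep running under resource pressure."
---
apiVersion: v1
kind: Pod
metadata:
  name: resources-demo
spec:
  priorityClassName: business-critical
  containers:
    - name: my-container
      image: my-image:latest
      resources:
        requests:              # what the scheduler reserves on the node
          cpu: "500m"          # half a CPU core
          memory: "256Mi"
        limits:                # hard ceiling enforced at runtime
          cpu: "1"             # CPU use above this is throttled
          memory: "512Mi"      # exceeding this gets the container OOM-killed
```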

Practical Example: Deploying a Simple Web Application

Let's see a practical example of how you might deploy a web application with Kubernetes:

- Create a Deployment:

kubectl create deployment web-app --image=nginx:latest

- Scale it to 3 replicas:

kubectl scale deployment web-app --replicas=3

- Expose it as a Service:

kubectl expose deployment web-app --port=80 --type=LoadBalancer

- Check your deployment:

kubectl get pods

kubectl get services

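Just like that, you've deployed a web server with three replicas and a load balancer in front of it! On a local cluster without a cloud provider, the service's external IP may stay pending; with Minikube, running minikube tunnel assigns one.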

Common Challenges and Best Practices

Challenges You Might Face

- Steep Learning Curve: Kubernetes has many concepts to grasp

- Resource Management: Properly sizing your requests and limits

- Networking Complexity: Understanding how pods communicate

- Storage Configuration: Setting up persistent storage correctly

- Security Concerns: Properly securing your cluster

Best Practices

- Start Small: Begin with simple deployments and gradually add complexity

- Use Namespaces: Organize your resources logically

- Implement Resource Limits: Prevent resource contention

- Leverage Labels and Annotations: For better organization and automation

- Automate Everything: Use CI/CD pipelines to deploy to Kubernetes

- Monitor Your Cluster: Implement robust monitoring and alerting

- Keep Your Cluster Updated: Regular updates include security patches and new features
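
Several of these practices can be combined per team. This sketch creates a labeled namespace, caps its total resource use with a ResourceQuota, and fills in default requests and limits with a LimitRange. The namespace name team-a, the label, and every number are hypothetical. Once a quota tracks CPU and memory, pods in that namespace must specify those values; the LimitRange defaults supply them for containers that don't.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a                 # hypothetical team namespace
  labels:
    team: team-a               # labels make filtering and automation easier
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:                        # totals across all pods in the namespace
    requests.cpu: "4"
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"
---
apiVersion: v1
kind: LimitRange
metadata:
  name: team-a-defaults
  namespace: team-a
spec:
  limits:
    - type: Container
      defaultRequest:          # applied when a container sets no request
        cpu: "100m"
        memory: 128Mi
      default:                 # applied when a container sets no limit
        cpu: "500m"
        memory: 256Mi
```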

Kubernetes in the Enterprise

Kubernetes isn't just for startups or tech giants—it's being adopted across industries:

- Financial Services: For scaling transaction processing systems

- Healthcare: Managing patient data and medical applications

- Retail: Handling seasonal traffic spikes

- Manufacturing: Running IoT and analytics workloads

- Media: Delivering content at scale

The flexibility and power of Kubernetes make it suitable for almost any application that benefits from containerization.

Conclusion: Your Next Steps with Kubernetes

Kubernetes might seem complex at first—and it is—but the benefits it provides for managing containerized applications are enormous. As containerization becomes the standard for application deployment, Kubernetes knowledge becomes increasingly valuable.

Ready to dive deeper? Here are some next steps:

- Set up a local Kubernetes cluster using Minikube or Kind

- Deploy a simple application and experiment with scaling and updates

- Learn about Helm charts for packaging Kubernetes applications

- Explore Kubernetes operators for managing complex applications

- Check out managed Kubernetes services like EKS (AWS), GKE (Google), or AKS (Azure)

Kubernetes is a journey, not a destination. The ecosystem is constantly evolving, bringing new tools and practices. But mastering the fundamentals we've covered here will give you a solid foundation to build upon.

Stay tuned for our next article in this series, where we'll explore Infrastructure as Code with Terraform and CloudFormation—the perfect complement to your Kubernetes knowledge!

Have questions about Kubernetes or want to share your experience? Drop a comment below or contact us directly. We'd love to hear from you!