Introduction: The Container Revolution

Remember the classic developer nightmare? "But it works on my machine!" For decades, this phrase haunted dev teams, causing deployment headaches, unexpected bugs, and late-night troubleshooting sessions. Enter Docker and container technology—the game-changing solution that's revolutionized how we build, ship, and run applications.

In today's DevOps landscape, containers have become as fundamental as version control. But what exactly makes these lightweight, portable environments so essential? Let's dive into the world of containers and discover why they've become the backbone of modern application development.

What Are Containers (And Why Should You Care)?

At their core, containers are lightweight, standalone packages that contain everything needed to run an application: code, runtime, system tools, libraries, and settings. Think of them as standardized shipping containers for software—regardless of what's inside, they can be transported and deployed anywhere.

Unlike traditional virtual machines that require a full operating system for each instance, containers share the host system's OS kernel, making them incredibly efficient and fast to deploy.
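One quick way to see the shared kernel for yourself is to ask both the host and a container which kernel they are running. The helper below is a minimal sketch; the function name `compare_kernels` and the `alpine:3.20` image are illustrative choices, not part of any standard tooling.

```shell
# Compare the kernel release reported by the host and by a container.
# alpine:3.20 is an illustrative image choice; any small Linux image works.
compare_kernels() {
  echo "host kernel:      $(uname -r)"
  echo "container kernel: $(docker run --rm alpine:3.20 uname -r)"
}
```

On a Linux host with a standard Docker install, calling `compare_kernels` should typically print the same release twice. With Docker Desktop on macOS or Windows, the container reports the kernel of the Linux VM that Docker runs behind the scenes.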

The "It Works on My Machine" Problem

Before containers, the journey from a developer's laptop to production was fraught with inconsistencies:

- Different OS versions between development and production

- Missing dependencies or conflicting library versions

- Variations in configuration settings

- Environment-specific bugs that were impossible to reproduce

These inconsistencies led to deployment failures, extended debugging sessions, and the dreaded "works on my machine" excuse. Containers solve this by packaging the application with everything it needs, ensuring it runs the same way regardless of where it's deployed.

6 Reasons Containers Matter in Modern DevOps

1. Isolation and Portability

Containers create isolated environments for applications, preventing conflicts between different services or dependencies. This isolation means that containerized applications can run anywhere a compatible container runtime is available, from a developer's laptop to a test server to production cloud infrastructure, without modification.

This portability removes most environment-specific bugs and goes a long way toward retiring the "it works on my machine" problem. Developers, testers, and operations teams all work from the same image, which reduces deployment failures.

2. Efficiency and Resource Utilization

Unlike virtual machines that require a full OS for each instance, containers share the host system's kernel and run as isolated processes. This architectural difference makes containers:

- Significantly lighter (megabytes vs. gigabytes)

- Faster to start (seconds vs. minutes)

- More resource-efficient (a server can typically host far more containers than VMs, though the exact ratio depends on the workload)

For organizations, this efficiency translates to reduced infrastructure costs, better hardware utilization, and faster application scaling.

3. Enhanced Security Through Isolation

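Containers isolate applications using Linux kernel features such as namespaces and control groups (cgroups), limiting what each container can access and making it harder for one compromised container to affect others. Because all containers share the host kernel, this isolation is weaker than a virtual machine's. Docker provides security features like: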

- Process isolation

- File system isolation

- Network namespace separation

- Resource limitations (CPU, memory, I/O)

While containers aren't inherently secure without proper configuration, they provide excellent building blocks for creating secure application environments.
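As a rough illustration of "proper configuration", the sketch below wraps `docker run` with a handful of restrictive flags. The function name `run_hardened` and the specific limits (256 MB, half a CPU, 100 processes) are assumptions chosen for the example; suitable values depend on your application.

```shell
# Run an image with a tighter-than-default security and resource profile.
# The limits below are illustrative, not recommendations for every workload.
run_hardened() {
  docker run --rm \
    --read-only \
    --cap-drop=ALL \
    --security-opt no-new-privileges \
    --pids-limit 100 \
    --memory 256m \
    --cpus 0.5 \
    "$@"
}
```

For example, `run_hardened alpine:3.20 id` runs `id` in a container with a read-only root filesystem, no Linux capabilities, and capped memory, CPU, and process count. Applications that need to write files or bind privileged ports will need some of these restrictions relaxed.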

4. Accelerated Development and Testing

The consistency provided by containers transforms the development and testing process:

- Developers can work in environments identical to production

- QA teams can test in isolated, production-like settings

- Integration testing becomes more reliable

- CI/CD pipelines can build, test, and deploy consistently

This acceleration is particularly valuable for microservices architectures where multiple services need to be developed and tested independently before being integrated.

5. Standardization and Consistency

Docker popularized a common language and toolset for packaging and deploying applications, and its image and runtime formats are now standardized through the Open Container Initiative (OCI). This standardization means:

- Applications run consistently on any infrastructure with a compatible container runtime

- Teams can use the same workflows regardless of the underlying technology

- Tools and best practices can be shared across projects and organizations

In a field known for constant change, Docker's standardization brings welcome stability to application deployment processes.

6. Microservices Enablement

Containers and microservices architecture go hand in hand. By packaging each service as a separate container, teams can:

- Develop, deploy, and scale services independently

- Use different technologies for different services

- Isolate failures to specific services

- Replace or upgrade services without downtime

For complex applications, this decoupling of services creates more resilient, maintainable, and scalable systems.

How Docker Works: The Basics

To understand Docker's power, you need to grasp a few fundamental concepts:

Images vs. Containers

- Docker Images: Think of these as blueprints or templates. They are read-only files containing the application code, libraries, dependencies, tools, and other files needed to run an application.

- Containers: These are the running instances created from images. You can have multiple containers running from the same image, each with its own isolated environment.
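To make the image/container distinction concrete, here is a small sketch that starts two containers from one image. The function name and the container names `web-a` and `web-b` are hypothetical.

```shell
# Start two independent containers from the same image, list them, then clean up.
# The container names web-a and web-b are hypothetical.
two_from_one_image() {
  image="nginx:alpine"
  docker pull "$image"
  docker run -d --name web-a "$image"
  docker run -d --name web-b "$image"
  docker ps --filter "ancestor=$image"
  docker rm -f web-a web-b
}
```

Running `two_from_one_image` downloads the image once, yet `docker ps` lists two separate containers, each with its own ID, filesystem changes, and network identity.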

Dockerfile: Infrastructure as Code

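The Dockerfile is a simple text file that defines how to build a Docker image. It contains a series of instructions like:

FROM node:22

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 3000

CMD ["npm", "start"]

Copying the package manifests before the rest of the source lets Docker reuse the cached dependency layer when only application code changes. This approach treats infrastructure as code, making application environments reproducible, versionable, and shareable.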
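If you want to see how the instructions in a Dockerfile turn into an image, the sketch below builds one and then lists its layers. The function name and the default tag `my-node-app:1.0.0` are hypothetical, and it assumes a Dockerfile exists in the current directory.

```shell
# Build an image from the Dockerfile in the current directory and list its layers.
# The default tag my-node-app:1.0.0 is a hypothetical name.
build_and_show_layers() {
  tag="${1:-my-node-app:1.0.0}"
  docker build -t "$tag" . && docker history "$tag"
}
```

The `docker history` output shows roughly one entry per Dockerfile instruction, which is a handy way to spot which steps add the most size to an image.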

Docker Hub: The Container Registry

Docker Hub functions as a central repository for Docker images, similar to GitHub for code. Developers can:

- Share and download pre-built images

- Find official images for common technologies (Node.js, Python, etc.)

- Store private images for their organization

- Automate image builds when code changes

This ecosystem accelerates development by providing ready-to-use components for common application needs.
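Sharing an image through a registry usually comes down to tagging it with a repository name and pushing it. The helper below is a sketch under a few assumptions: you have already run `docker login`, and the repository name you pass in (for example `myorg/my-website`) is one you control. Both the function name and the example names are hypothetical.

```shell
# Tag a local image for a registry repository and push it.
# Assumes `docker login` has already been run; the names are hypothetical.
publish_image() {
  local_image="$1"   # e.g. my-website
  repo="$2"          # e.g. myorg/my-website
  version="$3"       # e.g. 1.0.0
  docker tag "$local_image" "$repo:$version" &&
    docker push "$repo:$version"
}
```

After `publish_image my-website myorg/my-website 1.0.0`, anyone with access to the repository could fetch the same image with `docker pull myorg/my-website:1.0.0`.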

Docker in the DevOps Pipeline

Containers shine brightest when integrated into a complete DevOps workflow:

Development Phase

Developers work in containerized environments that match production, preventing environment-related bugs and eliminating the "works on my machine" problem.

Continuous Integration

In the CI process, containers ensure consistent build environments. Each build creates a new container image that's ready for testing and deployment.

Testing

Automated tests run in containers identical to production, making test results more reliable. Multiple versions of the application can be tested simultaneously in isolated environments.

Deployment

Containerized applications deploy consistently across environments—from development to staging to production. The same container that passed testing is deployed to production without modification.
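The "build once, promote the same image" idea can be sketched as a single CI step. This is one possible shape rather than a prescribed pipeline: the function name is hypothetical, it assumes the image can run its own tests with `npm test`, and it tags the image with a commit identifier that the CI system passes in.

```shell
# Build once, test that exact image, and push it only if the tests pass.
# Assumes the image contains an `npm test` script; names are hypothetical.
ci_build_test_push() {
  image="$1"    # e.g. myorg/my-app
  commit="$2"   # e.g. the output of: git rev-parse --short HEAD
  docker build -t "$image:$commit" . &&
    docker run --rm "$image:$commit" npm test &&
    docker push "$image:$commit"
}
```

Because each step is chained with `&&`, a failing test run stops the function before the push, so only images that passed their tests reach the registry. Later stages would then deploy `myorg/my-app:<commit>` as is instead of rebuilding it.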

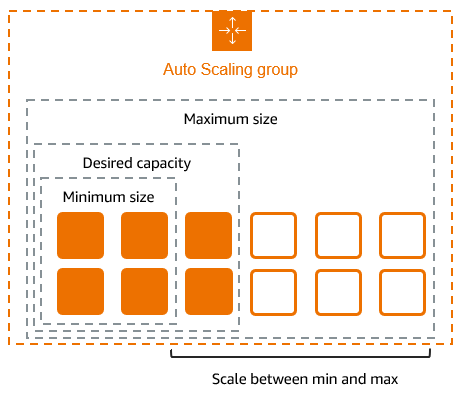

Scaling and Orchestration

Container orchestration tools like Kubernetes manage container deployment, scaling, networking, and availability. They enable:

- Automatic scaling based on demand

- Self-healing applications

- Rolling updates without downtime

- Efficient resource allocation

This orchestration layer is what makes containers truly enterprise-ready.
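To give a feel for what this looks like in practice, the sketch below uses `kubectl` to create a Deployment, scale it, and roll out a new image version. It assumes `kubectl` is already configured for a cluster that can pull the images you name; the function name and example image names are hypothetical.

```shell
# Create a Deployment, scale it out, then roll out a new image version.
# Assumes kubectl is configured for a cluster that can pull these images.
deploy_scale_update() {
  name="$1"        # e.g. my-website
  image="$2"       # e.g. myorg/my-website:1.0.0
  new_image="$3"   # e.g. myorg/my-website:1.0.1
  kubectl create deployment "$name" --image="$image" &&
    kubectl scale "deployment/$name" --replicas=3 &&
    kubectl set image "deployment/$name" "*=$new_image" &&
    kubectl rollout status "deployment/$name"
}
```

The `*=` form of `kubectl set image` updates every container in the Deployment, which keeps the sketch independent of the container's name. In a real project you would more likely describe the Deployment in a manifest file kept in version control.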

Getting Started with Docker: A Simple Example

Let's look at a basic example of containerizing a simple web application:

- Create a Dockerfile:

FROM nginx:alpine

COPY ./website /usr/share/nginx/html

EXPOSE 80

CMD ["nginx", "-g", "daemon off;"]

- Build the image:

docker build -t my-website .

- Run the container:

docker run -p 8080:80 my-website

That's it! Your website is now running in a container, reachable at http://localhost:8080 on the Docker host, and ready to be deployed anywhere Docker runs.
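If you would rather check the result from the terminal than a browser, a small smoke test might look like the sketch below. It assumes the `my-website` image from the steps above has been built, that port 8080 is free, and that `curl` is installed; the function name is hypothetical.

```shell
# Start the site in the background, fetch the home page, then stop the container.
# The one-second pause gives nginx a moment to start listening.
smoke_test_site() {
  if docker run -d --rm --name my-website -p 8080:80 my-website &&
     sleep 1 &&
     curl -fsS http://localhost:8080/; then
    status=0
  else
    status=1
  fi
  docker stop my-website
  return "$status"
}
```

The function returns a non-zero status if the container fails to start or the page cannot be fetched, so it can double as a quick check in a script.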

Overcoming Common Container Challenges

While containers offer numerous benefits, they also introduce new challenges:

1. Container Orchestration Complexity

Managing dozens or hundreds of containers requires orchestration tools like Kubernetes, which have their own learning curves. Start small and gradually adopt more complex patterns as your needs grow.

2. Stateful Applications

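Containers are ephemeral by design: anything written to a container's filesystem is lost when the container is removed, which makes stateful applications challenging. Keep state outside the container with named volumes or bind mounts, or use the persistent storage features of your orchestrator.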
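As a small example of keeping state outside the container, the sketch below runs PostgreSQL with its data directory on a named volume. The function name, the volume name `pgdata`, the container name `db`, and the password are placeholders chosen for illustration.

```shell
# Run PostgreSQL with its data directory on a named volume so the data
# outlives the container. Volume name, container name, and password are placeholders.
run_db_with_volume() {
  docker volume create pgdata &&
    docker run -d --name db \
      -e POSTGRES_PASSWORD=change-me \
      -v pgdata:/var/lib/postgresql/data \
      postgres:16
}
```

If the `db` container is later removed and recreated with the same `-v pgdata:...` option, the new container picks up the existing database files from the volume.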

3. Security Concerns

Container security requires attention to image scanning, runtime protection, and access controls. Follow security best practices like using minimal base images, scanning for vulnerabilities, and implementing least privilege principles.

4. Monitoring and Observability

Traditional monitoring tools may not work well with containerized applications. Use container-aware monitoring solutions that understand the dynamic nature of containers and can track ephemeral instances.
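Before reaching for a full monitoring stack, Docker's built-in commands already give a basic view of a single container. The helper below is a minimal sketch (the function name is hypothetical) that prints a container's state, a one-off resource reading, and its most recent log lines.

```shell
# Print a one-off snapshot of a container's state, resource usage, and recent logs.
container_snapshot() {
  name="$1"
  docker inspect --format '{{.State.Status}}' "$name"
  docker stats --no-stream "$name"
  docker logs --tail 20 "$name"
}
```

This is useful for ad hoc debugging on one host, for example `container_snapshot web`. It is not a substitute for container-aware monitoring across many short-lived instances.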

The Future of Containers

Containers continue to evolve, with several trends shaping their future:

- WebAssembly (Wasm) offering even lighter alternatives for some workloads

- Serverless containers removing the need to manage infrastructure

- eBPF-based security providing deeper visibility into container activities

- AI-powered container orchestration optimizing resource allocation

As these technologies mature, containers will become even more powerful tools in the DevOps arsenal.

Conclusion: Embracing the Container Paradigm

Containers have transformed application development and deployment by solving the age-old problem of environment inconsistency. They've enabled faster development cycles, more reliable deployments, and better resource utilization—all critical components of modern DevOps practices.

Whether you're just starting your DevOps journey or looking to optimize existing processes, containers provide a foundation for building more efficient, reliable, and scalable applications. By understanding why containers matter and how they fit into the DevOps ecosystem, you're better equipped to leverage their power in your own projects.

Ready to dive deeper into DevOps concepts? Check out our guide to CI/CD pipelines or learn about Docker Compose for multi-service applications.
